AI is coming for music, too

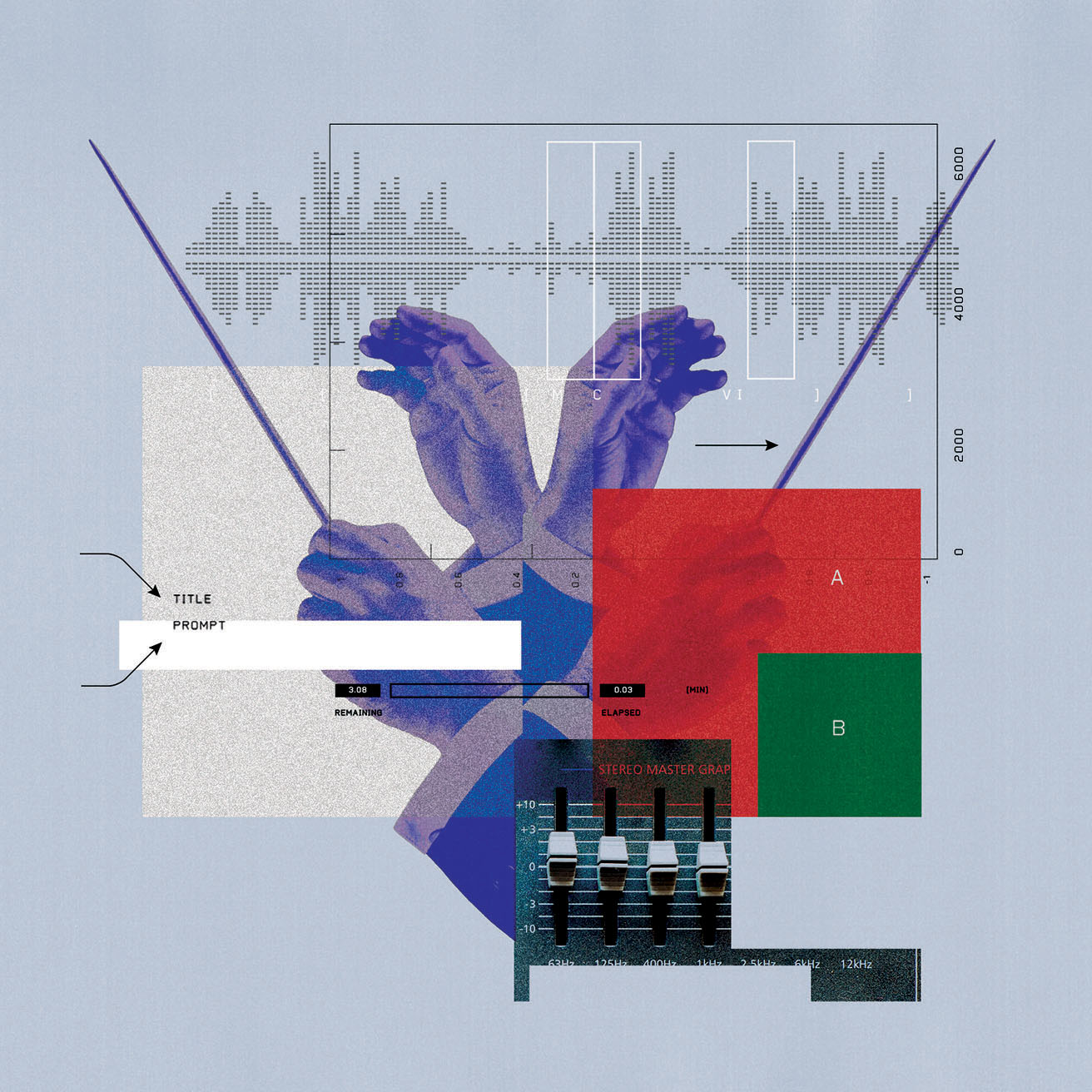

The top of this story contains samples of AI-generated music.

Artificial intelligence was barely a term in 1956, when top scientists from the field of computing arrived at Dartmouth College for a summer conference. The computer scientist John McCarthy had coined the phrase in the funding proposal for the event, a gathering to work through how to build machines that could use language, solve problems like humans, and improve themselves. But it was a good choice, one that captured the organizers’ founding premise: Any feature of human intelligence could “in principle be so precisely described that a machine can be made to simulate it.”

In their proposal, the group had listed several “aspects of the artificial intelligence problem.” The last item on their list, and in hindsight perhaps the most difficult, was building a machine that could exhibit creativity and originality.

At the time, psychologists were grappling with how to define and measure creativity in humans. The prevailing theory (that creativity was a product of intelligence and high IQ) was fading, but psychologists weren’t sure what to replace it with. The Dartmouth organizers had one of their own. “The difference between creative thinking and unimaginative competent thinking lies in the injection of some randomness,” they wrote, adding that such randomness “must be guided by intuition to be efficient.”

Nearly 70 years later, following a number of boom-and-bust cycles in the field, we now have AI models that more or less follow that recipe. While large language models that generate text have exploded in the last three years, a different type of AI, based on what are called diffusion models, is having an unprecedented impact on creative domains. By transforming random noise into coherent patterns, diffusion models can generate new images, videos, or speech, guided by text prompts or other input data. The best ones can create outputs indistinguishable from the work of people, as well as bizarre, surreal results that feel distinctly nonhuman.

Now these models are marching into a creative field that’s arguably more vulnerable to disruption than any other: music. AI-generated creative works, from orchestra performances to heavy metal, are poised to suffuse our lives more thoroughly than any other product of AI has done yet. The songs are likely to blend into our streaming platforms, party and wedding playlists, soundtracks, and more, whether or not we notice who (or what) made them.

For years, diffusion models have stirred debate in the visual-art world about whether what they produce reflects true creation or mere replication. Now this debate has come for music, an art form that’s deeply embedded in our experiences, memories, and social lives. Music models can now create songs capable of eliciting real emotional responses, presenting a stark example of how difficult it’s becoming to define authorship and originality in the age of AI.

The courts are actively grappling with this murky territory. Major record labels are suing the top AI music generators, alleging that diffusion models do little more than replicate human art without compensation to artists. The model makers counter that their tools are made to assist in human creation.

In deciding who is right, we’re forced to think hard about our own human creativity. Is creativity, whether in artificial neural networks or biological ones, merely the result of vast statistical learning and drawn connections, with a sprinkling of randomness? If so, then authorship is a slippery concept. If not (if there’s some distinctly human element to creativity), what is it? What does it mean to be moved by something without a human creator? I had to wrestle with these questions the first time I heard an AI-generated song that was genuinely fantastic; it was unsettling to know that someone merely wrote a prompt and clicked “Generate.” That predicament is coming soon for you, too.

Making connections

After the Dartmouth conference, its participants went off in different research directions to create the foundational technologies of AI. At the same time, cognitive scientists were following a 1950 call from J.P. Guilford, president of the American Psychological Association, to tackle the question of creativity in human beings. They came to a definition, first formalized in 1953 by the psychologist Morris Stein in the Journal of Psychology: Creative works are both novel, meaning they present something new, and useful, meaning they serve some purpose to someone. Some have called for “useful” to be replaced by “satisfying,” and others have pushed for a third criterion: that creative things are also surprising.

Later, in the 1990s, the rise of functional magnetic resonance imaging made it possible to study more of the neural mechanisms underlying creativity in many fields, including music. Computational methods in the past few years have also made it easier to map out the role that memory and associative thinking play in creative decisions.

What has emerged is less a grand unified theory of how a creative idea originates and unfolds in the brain and more an ever-growing list of powerful observations. We can first divide the human creative process into phases, including an ideation or proposal step, followed by a more critical and evaluative step that looks for merit in ideas. A leading theory on what guides these two phases is called the associative theory of creativity, which posits that the most creative people can form novel connections between distant concepts.

“It could be like spreading activation,” says Roger Beaty, a researcher who leads the Cognitive Neuroscience of Creativity Laboratory at Penn State. “You think of one thing; it just kind of activates related concepts to whatever that one concept is.”

These connections often hinge specifically on semantic memory, which stores concepts and facts, as opposed to episodic memory, which stores memories from a particular time and place. Recently, more sophisticated computational models have been used to study how people make connections between concepts across great “semantic distances.” For example, the word apocalypse is more closely related to nuclear power than to celebration. Studies have shown that highly creative people may perceive very semantically distinct concepts as close together. Artists have been found to generate word associations across greater distances than non-artists. Other research has supported the idea that creative people have “leaky” attention; that is, they often notice information that might not be particularly relevant to their immediate task.
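One common way to put a number on “semantic distance” is to represent each concept as a vector and measure the angle between vectors. The sketch below illustrates that idea only: the three-dimensional vectors are invented for this example, and the studies mentioned above rely on far richer models whose details are not given here.

```python
import math

def cosine_distance(a, b):
    """Return 1 - cosine similarity: 0 means same direction, larger means further apart."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm

# Hypothetical, hand-made concept vectors (not taken from any real model).
embeddings = {
    "apocalypse": [0.9, 0.8, 0.1],
    "nuclear power": [0.7, 0.9, 0.2],
    "celebration": [0.1, 0.2, 0.9],
}

near = cosine_distance(embeddings["apocalypse"], embeddings["nuclear power"])
far = cosine_distance(embeddings["apocalypse"], embeddings["celebration"])
print(f"apocalypse vs. nuclear power: {near:.3f}")
print(f"apocalypse vs. celebration:   {far:.3f}")
```

With these made-up vectors, apocalypse sits much closer to nuclear power than to celebration, mirroring the example in the text.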

Neuroscientific methods for evaluating these processes don’t suggest that creativity unfolds in a particular area of the brain. “Nothing in the brain produces creativity like a gland secretes a hormone,” Dean Keith Simonton, a leader in creativity research, wrote in the Cambridge Handbook of the Neuroscience of Creativity.

The evidence instead points to a few dispersed networks of activity during creative thought, Beaty says: one to support the initial generation of ideas through associative thinking, another involved in identifying promising ideas, and another for evaluation and modification. A new study, led by researchers at Harvard Medical School and published in February, suggests that creativity might even involve the suppression of particular brain networks, like ones involved in self-censorship.

So far, machine creativity (if you can call it that) looks quite different. Though at the time of the Dartmouth conference AI researchers were interested in machines inspired by human brains, that focus had shifted by the time diffusion models were invented, about a decade ago.

The best clue to how they work is in the name. If you dip a paintbrush loaded with red ink into a glass jar of water, the ink will diffuse and swirl into the water seemingly at random, eventually yielding a pale pink liquid. Diffusion models simulate this process in reverse, reconstructing legible forms from randomness.

For a sense of how this works for images, picture a photo of an elephant. To train the model, you make a copy of the photo, adding a layer of random black-and-white static on top. Make a second copy and add a bit more, and so on hundreds of times until the last image is pure static, with no elephant in sight. For each image in between, a statistical model predicts how much of the image is noise and how much is really the elephant. It compares its guesses with the right answers and learns from its mistakes. Over millions of these examples, the model gets better at “de-noising” the images and connecting these patterns to descriptions like “male Borneo elephant in an open field.”
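The noising half of that training recipe can be sketched in a few lines. This is a toy illustration under stated assumptions: the six-value “image,” the cosine-shaped schedule, and the mixing formula are choices made for this example (similar ones appear in published diffusion work), not a description of any particular company’s model. The learning step itself is omitted; the sketch only builds the (noisy image, noise) pairs a model would be trained to pull apart.

```python
import math
import random

def noising_schedule(num_steps):
    """Fraction of the original image kept at each step, falling from 1.0 to about 0.0."""
    return [math.cos(0.5 * math.pi * t / num_steps) ** 2 for t in range(num_steps + 1)]

def add_noise(pixels, keep, rng):
    """Blend clean pixels with Gaussian static. Returns (noisy_pixels, noise_used)."""
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [math.sqrt(keep) * p + math.sqrt(1.0 - keep) * n
             for p, n in zip(pixels, noise)]
    return noisy, noise

rng = random.Random(0)                      # fixed seed so the run is repeatable
elephant = [0.9, 0.1, 0.8, 0.2, 0.7, 0.3]   # hypothetical stand-in for a photo
schedule = noising_schedule(200)            # "hundreds of times"

# Each pair is one training example: the model sees the noisy copy and
# is asked to guess the noise that was added to it.
training_pairs = [add_noise(elephant, keep, rng) for keep in schedule]

first_noisy, _ = training_pairs[0]
last_noisy, last_noise = training_pairs[-1]
print("first copy equals the photo:", first_noisy == elephant)
print("last copy is essentially pure static:",
      all(abs(a - b) < 1e-9 for a, b in zip(last_noisy, last_noise)))
```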

Now that it’s been trained, generating a new image means reversing this process. If you give the model a prompt, like “a happy orangutan in a mossy forest,” it generates an image of random white noise and works backward, using its statistical model to remove bits of noise step by step. At first, rough shapes and colors appear. Details come after, and finally (if it works) an orangutan emerges, all without the model “understanding” what an orangutan is.

Musical images

The approach works much the same way for music. A diffusion model doesn’t “compose” a song the way a band might, starting with piano chords and adding vocals and drums. Instead, all the elements are generated at once. The process hinges on the fact that the many complexities of a song can be depicted visually in a single waveform, representing the amplitude of a sound wave plotted against time.

Think of a record player. By traveling along a groove in a piece of vinyl, a needle mirrors the path of the sound waves engraved in the material and transmits it into a signal for the speaker. The speaker simply pushes out air in these patterns, generating sound waves that convey the whole song.

From a distance, a waveform might look as if it just follows a song’s volume. But if you were to zoom in closely enough, you could see patterns in the spikes and valleys, like the 49 waves per second for a bass guitar playing a low G. A waveform contains the summation of the frequencies of all different instruments and textures. “You see certain shapes start taking place,” says David Ding, cofounder of the AI music company Udio, “and that kind of corresponds to the broad melodic sense.”
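To make the “summation of frequencies” idea concrete, here is a small sketch that adds three pure tones into one waveform and then checks which frequency is strongest. Only the 49-waves-per-second bass note comes from the text; the other two tones, their volumes, and the low sample rate are assumptions picked to keep the example fast and readable.

```python
import math

SAMPLE_RATE = 2000  # samples per second; hypothetical and deliberately low

def tone(freq_hz, amplitude, num_samples):
    """One pure sine tone, sampled SAMPLE_RATE times per second."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE)
            for n in range(num_samples)]

def mix(*tracks):
    """Add the tracks sample by sample into a single waveform."""
    return [sum(samples) for samples in zip(*tracks)]

def strength(waveform, freq_hz):
    """How strongly one frequency is present in the waveform (a single DFT bin)."""
    re = sum(s * math.cos(2 * math.pi * freq_hz * n / SAMPLE_RATE)
             for n, s in enumerate(waveform))
    im = sum(s * math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE)
             for n, s in enumerate(waveform))
    return math.hypot(re, im) / len(waveform)

one_second = SAMPLE_RATE
bass_low_g = tone(49, 1.0, one_second)    # the low G from the text
overtone = tone(98, 0.4, one_second)      # illustrative quieter tone an octave up
other_part = tone(330, 0.2, one_second)   # illustrative second "instrument"

waveform = mix(bass_low_g, overtone, other_part)

# Scan whole-number frequencies and report the strongest one.
strongest = max(range(1, 500), key=lambda f: strength(waveform, f))
print("strongest frequency (Hz):", strongest)
```

The mixed waveform is just one list of numbers, yet each ingredient can still be picked back out of it, which is why a single waveform can stand in for a whole song.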

Since waveforms, or similar charts called spectrograms, can be treated like images, you can create a diffusion model out of them. A model is fed millions of clips of existing songs, each labeled with a description. To generate a new song, it starts with pure random noise and works backward to create a new waveform. The path it takes to do so is shaped by what words someone puts into the prompt.

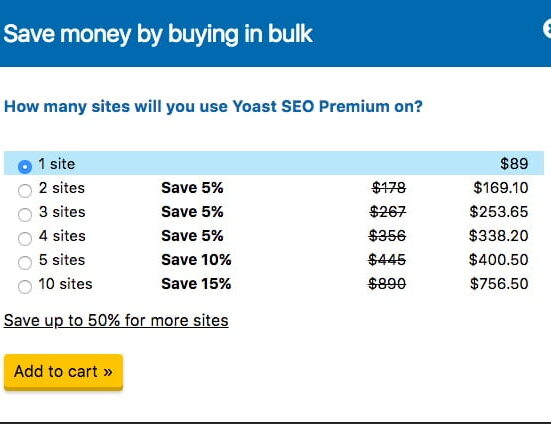

Ding worked at Google DeepMind for five years as a senior research engineer on diffusion models for images and videos, but he left to found Udio, based in New York, in 2023. The company and its competitor Suno, based in Cambridge, Massachusetts, are now leading the race for music generation models. Both aim to build AI tools that enable nonmusicians to make music. Suno is larger, claiming more than 12 million users, and raised a $125 million funding round in May 2024. The company has partnered with artists including Timbaland. Udio raised a seed funding round of $10 million in April 2024 from prominent investors like Andreessen Horowitz as well as musicians Will.i.am and Common.

The results of Udio and Suno so far suggest there’s a sizable audience of people who may not care whether the music they listen to is made by humans or machines. Suno has artist pages for creators, some with large followings, who generate songs entirely with AI, often accompanied by AI-generated images of the artist. These creators are not musicians in the conventional sense but skilled prompters, creating work that can’t be attributed to a single composer or singer. In this emerging space, our normal definitions of authorship (and our lines between creation and replication) all but dissolve.

The music industry is pushing back. Both companies were sued by major record labels in June 2024, and the lawsuits are ongoing. The labels, including Universal and Sony, allege that the AI models have been trained on copyrighted music “at an almost unimaginable scale” and generate songs that “imitate the qualities of genuine human sound recordings” (the case against Suno cites one ABBA-adjacent song called “Prancing Queen,” for example).

Suno didn’t respond to requests for comment on the litigation, but in a statement responding to the case posted on Suno’s blog in August, CEO Mikey Shulman said the company trains on music found on the open internet, which “indeed contains copyrighted materials.” But, he argued, “learning is not infringing.”

A representative from Udio said the company would not comment on pending litigation. At the time of the lawsuit, Udio released a statement mentioning that its model has filters to ensure that it “does not reproduce copyrighted works or artists’ voices.”

Complicating matters even further is guidance from the US Copyright Office, released in January, that says AI-generated works can be copyrighted if they involve a considerable amount of human input. A month later, an artist in New York received what might be the first copyright for a piece of visual art made with the help of AI. The first song could be next.

Novelty and mimicry

These legal cases wade into a gray area similar to one explored by other court battles unfolding in AI. At issue here is whether training AI models on copyrighted content is allowed, and whether generated songs unfairly copy a human artist’s style.

But AI music is likely to proliferate in some form regardless of these court decisions; YouTube has reportedly been in talks with major labels to license their music for AI training, and Meta’s recent expansion of its agreements with Universal Music Group suggests that licensing for AI-generated music might be on the table.

If AI music is here to stay, will any of it be any good? Consider three factors: the training data, the diffusion model itself, and the prompting. The model can only be as good as the library of music it learns from and the descriptions of that music, which must be complex to capture it well. A model’s architecture then determines how well it can use what’s been learned to generate songs. And the prompt you feed into the model (as well as the extent to which the model “understands” what you mean by “turn down that saxophone,” for instance) is pivotal too.

Arguably the most important issue is the first: How extensive and diverse is the training data, and how well is it labeled? Neither Suno nor Udio has disclosed what music has gone into its training set, though these details will likely have to be disclosed during the lawsuits.

Udio says the way these songs are labeled is essential to the model. “An area of active research for us is: How do we get more and more refined descriptions of music?” Ding says. A basic description would identify the genre, but then you could also say whether a song is moody, uplifting, or calm. More technical descriptions might mention a two-five-one chord progression or a specific scale. Udio says it does this through a combination of machine and human labeling.

“Since we want to target a broad range of target users, that also means that we need a broad range of music annotators,” he says. “Not just people with music PhDs who can describe the music on a very technical level, but also music enthusiasts who have their own informal vocabulary for describing music.”

Competitive AI music generators must also learn from a constant supply of new songs made by people, or else their outputs will be stuck in time, sounding stale and dated. For this, today’s AI-generated music relies on human-generated art. In the future, though, AI music models may train on their own outputs, an approach being experimented with in other AI domains.

Because models start with a random sampling of noise, they are nondeterministic; giving the same AI model the same prompt will result in a new song each time. That’s also because many makers of diffusion models, including Udio, inject additional randomness through the process, essentially taking the waveform generated at each step and distorting it ever so slightly in hopes of adding imperfections that serve to make the output more interesting or real. The organizers of the Dartmouth conference themselves recommended such a tactic back in 1956.
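A toy version shows where the variation comes from. Everything in this sketch is an assumption made for illustration: `toy_generate` is a hypothetical function, the “model” is a stand-in that simply nudges values toward a target derived from the prompt, and the `jitter` parameter plays the role of the small distortion added at each step. It is not Udio’s method. The point is only that the same prompt with a different random starting point yields a different result, while fixing the seed makes the run repeatable.

```python
import random

def toy_generate(prompt, seed, steps=50, jitter=0.01):
    """Toy stand-in for a diffusion sampler: start from noise, refine step by step."""
    rng = random.Random(seed)
    # Stand-in for a trained model: the prompt deterministically picks a target shape.
    target_rng = random.Random(prompt)
    target = [target_rng.uniform(-1.0, 1.0) for _ in range(8)]
    # Begin with pure random noise.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for _ in range(steps):
        # Move a little toward the target, then add a small random distortion.
        x = [xi + 0.2 * (ti - xi) + rng.gauss(0.0, jitter)
             for xi, ti in zip(x, target)]
    return x

take_one = toy_generate("moody jazz trio", seed=1)
take_two = toy_generate("moody jazz trio", seed=2)
repeat = toy_generate("moody jazz trio", seed=1)

print("same prompt, different seeds, identical output:", take_one == take_two)
print("same prompt, same seed, identical output:", take_one == repeat)
```

In this toy, the two takes land near the same target but never match exactly, which loosely mirrors getting a new song from the same prompt each time.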

According to Udio cofounder and chief operating officer Andrew Sanchez, it’s this randomness inherent in generative AI programs that comes as a shock to many people. For the past 70 years, computers have executed deterministic programs: Give the software an input and receive the same response every time.

“Many of our artist partners will be like, ‘Well, why does it do this?’” he says. “We’re like, well, we don’t really know.” The generative era requires a new mindset, even for the companies creating it: that AI programs can be messy and inscrutable.

Is the result creation or simply replication of the training data? Fans of AI music told me we could ask the same question about human creativity. As we listen to music through our youth, neural mechanisms for learning are weighted by these inputs, and memories of these songs influence our creative outputs. In a recent study, Anthony Brandt, a composer and professor of music at Rice University, pointed out that both humans and large language models use past experiences to evaluate possible future scenarios and make better choices.

Indeed, much of human art, especially in music, is borrowed. This often results in litigation, with artists alleging that a song was copied or sampled without permission. Some artists suggest that diffusion models should be made more transparent, so we could know that a given song’s inspiration is three parts David Bowie and one part Lou Reed. Udio says there’s ongoing research to achieve this, but right now, no one can do it reliably.

For great artists, “there’s that combination of novelty and influence that’s at play,” Sanchez says. “And I think that that’s something that will be at play in these technologies.”

But there are plenty of areas where attempts to equate human neural networks with artificial ones quickly fall apart under scrutiny. Brandt carves out one domain where he sees human creativity clearly soar above its machine-made counterparts: what he calls “amplifying the anomaly.” AI models operate in the realm of statistical sampling. They don’t work by emphasizing the exceptional but, rather, by reducing errors and finding probable patterns. Humans, on the other hand, are intrigued by quirks. “Rather than being treated as oddball events or ‘one-offs,’” Brandt writes, the quirk “permeates the creative product.”

He cites Beethoven’s decision to add a jarring off-key note in the last movement of his Symphony no. 8. “Beethoven could have left it at that,” Brandt says. “But rather than treating it as a one-off, Beethoven continues to reference this incongruous event in various ways. In doing so, the composer takes a momentary aberration and magnifies its impact.” One could look to similar anomalies in the backward loop sampling of late Beatles recordings, pitched-up vocals from Frank Ocean, or the incorporation of “found sounds,” like recordings of a crosswalk signal or a door closing, favored by artists like Charlie Puth and by Billie Eilish’s producer Finneas O’Connell.

If a creative output is indeed defined as one that’s both novel and useful, Brandt’s interpretation suggests that the machines may have us matched on the second criterion while humans reign supreme on the first.

To explore whether that’s true, I spent a few days playing around with Udio’s model. It takes a minute or two to generate a 30-second sample, but if you have paid versions of the model you can generate whole songs. I decided to pick 12 genres, generate a song sample for each, and then find similar songs made by people. I built a quiz to see if people in our newsroom could spot which songs were made by AI.

The average score was 46%. And for a few genres, especially instrumental ones, listeners were wrong more often than not. When I watched people do the test in front of me, I noticed that the qualities they confidently flagged as a sign of composition by AI (a fake-sounding instrument, a weird lyric) rarely proved them right. Predictably, people did worse in genres they were less familiar with; some did okay on country or soul, but many stood no chance against jazz, classical piano, or pop. Beaty, the creativity researcher, scored 66%, while Brandt, the composer, finished at 50% (though he answered correctly on the orchestral and piano sonata tests).

Remember that the model doesn’t deserve all the credit here; these outputs could not have been created without the work of human artists whose work was in the training data. But with just a few prompts, the model generated songs that few people would pick out as machine-made. A few could easily have been played at a party without raising objections, and I found two I genuinely loved, even as a lifelong musician and generally picky music person. But sounding real is not the same thing as sounding original. The songs didn’t feel driven by oddities or anomalies, certainly not on the level of Beethoven’s “jump scare.” Nor did they seem to bend genres or cover great leaps between themes. In my test, people sometimes struggled to decide whether a song was AI-generated or simply bad.

How much will this matter in the end? The courts will play a role in deciding whether AI music models serve up replications or new creations (and how artists are compensated in the process), but we, as listeners, will decide their cultural value. To appreciate a song, do we need to imagine a human artist behind it, someone with experience, ambitions, opinions? Is a great song no longer great if we find out it’s the product of AI?

Sanchez says people may wonder who’s behind the music. But “at the end of the day, however much AI component, however much human component, it’s going to be art,” he says. “And people are going to react to it on the quality of its aesthetic merits.”

In my experiment, though, I saw that the question really mattered to people, and some vehemently resisted the idea of enjoying music made by a computer model. When one of my test subjects instinctively started bobbing her head to an electro-pop song on the quiz, her face expressed doubt. It was almost as if she was trying her best to picture a human rather than a machine as the song’s composer. “Man,” she said, “I really hope this isn’t AI.”

It was.
