Amazon Bedrock Mannequin Distillation: Enhance perform calling accuracy whereas decreasing price and latency

Amazon Bedrock Mannequin Distillation is mostly accessible, and it addresses the elemental problem many organizations face when deploying generative AI: how you can preserve excessive efficiency whereas decreasing prices and latency. This system transfers data from bigger, extra succesful basis fashions (FMs) that act as academics to smaller, extra environment friendly fashions (college students), creating specialised fashions that excel at particular duties. On this publish, we spotlight the superior information augmentation strategies and efficiency enhancements in Amazon Bedrock Mannequin Distillation with Meta’s Llama mannequin household.

Agent perform calling represents a essential functionality for contemporary AI functions, permitting fashions to work together with exterior instruments, databases, and APIs by precisely figuring out when and how you can invoke particular features. Though bigger fashions usually excel at figuring out the suitable features to name and setting up correct parameters, they arrive with increased prices and latency. Amazon Bedrock Mannequin Distillation now permits smaller fashions to realize comparable perform calling accuracy whereas delivering considerably quicker response occasions and decrease operational prices.

The worth proposition is compelling: organizations can deploy AI brokers that preserve excessive accuracy in instrument choice and parameter building whereas benefiting from the decreased footprint and elevated throughput of smaller fashions. This development makes subtle agent architectures extra accessible and economically viable throughout a broader vary of functions and scales of deployment.

Conditions

For a profitable implementation of Amazon Bedrock Mannequin Distillation, you’ll want to satisfy a number of necessities. We advocate referring to the Submit a mannequin distillation job in Amazon Bedrock within the official AWS documentation for probably the most up-to-date and complete info.

Key necessities embrace:

- An energetic AWS account

- Chosen trainer and scholar fashions enabled in your account (confirm on the Mannequin entry web page of the Amazon Bedrock console)

- An S3 bucket for storing enter datasets and output artifacts

- Acceptable IAM permissions:

- Belief relationship permitting Amazon Bedrock to imagine the function

- Permissions to entry S3 for enter/output information and invocation logs

- Permissions for mannequin inference when utilizing inference profiles

In the event you’re utilizing historic invocation logs, verify if mannequin invocation logging is enabled in your Amazon Bedrock settings with S3 chosen because the logging vacation spot.

Making ready your information

Efficient information preparation is essential for profitable distillation of agent perform calling capabilities. Amazon Bedrock offers two major strategies for making ready your coaching information: importing JSONL recordsdata to Amazon S3 or utilizing historic invocation logs. No matter which technique to decide on, you’ll want to arrange correct formatting of instrument specs to allow profitable agent perform calling distillation.

Device specification format necessities

For agent perform calling distillation, Amazon Bedrock requires that instrument specs be supplied as a part of your coaching information. These specs have to be encoded as textual content inside the system or person message of your enter information. The instance proven is utilizing the Llama mannequin household’s perform calling format:

This strategy lets the mannequin discover ways to interpret instrument definitions and make applicable perform calls primarily based on person queries. Afterwards, when calling inference on the distilled scholar mannequin, we advise holding the immediate format according to the distillation enter information. This offers optimum efficiency by sustaining the identical construction the mannequin was educated on.

Making ready information utilizing Amazon S3 JSONL add

When making a JSONL file for distillation, every document should observe this construction:

Every document should embrace the schemaVersion subject with the worth bedrock-conversation-2024. The system subject comprises directions for the mannequin, together with accessible instruments. The messages subject comprises the dialog, with required person enter and optionally available assistant responses.

Utilizing historic invocation logs

Alternatively, you should use your historic mannequin invocation logs on Amazon Bedrock for distillation. This strategy makes use of precise manufacturing information out of your utility, capturing real-world perform calling situations. To make use of this technique:

- Allow invocation logging in your Amazon Bedrock account settings, choosing S3 as your logging vacation spot.

- Add metadata to your mannequin invocations utilizing the

requestMetadatasubject to categorize interactions. For instance: - When creating your distillation job, specify filters to pick out related logs primarily based on metadata:

Utilizing historic invocation logs means which you can distill data out of your manufacturing workloads, permitting the mannequin to be taught from actual person interactions and performance calls.

Mannequin distillation enhancements

Though the fundamental course of for making a mannequin distillation job stays just like what we described in our earlier weblog publish, Amazon Bedrock Mannequin Distillation introduces a number of enhancements with normal availability that enhance the expertise, capabilities, and transparency of the service.

Expanded mannequin assist

With normal availability, we’ve got expanded the mannequin choices accessible for distillation. Along with the fashions supported throughout preview, prospects can now use:

- Nova Premier as a trainer mannequin for Nova Professional/Lite/Micro fashions distillation

- Anthropic Claude Sonnet 3.5 v2 as a trainer mannequin for Claude Haiku distillation

- Meta’s Llama 3.3 70B as trainer and three.2 1B and 3B as scholar fashions for Meta mannequin distillation

This broader choice permits prospects to search out the stability between efficiency and effectivity throughout totally different use instances. For probably the most present listing of supported fashions, discuss with the Amazon Bedrock documentation.

Superior information synthesis know-how

Amazon Bedrock applies proprietary information synthesis strategies in the course of the distillation course of for sure use instances. This science innovation robotically generates further coaching examples that enhance the coed mannequin’s capacity to generate higher response.

For agent perform calling with Llama fashions particularly, the info augmentation strategies assist bridge the efficiency hole between trainer and scholar fashions in comparison with vanilla distillation (vanilla distillation means immediately annotating enter information with trainer response and run scholar coaching with supervised fine-tuning). This makes the coed fashions’ efficiency far more corresponding to the trainer after distillation whereas sustaining the fee and latency advantages of a smaller mannequin.

Enhanced coaching visibility

Amazon Bedrock mannequin distillation now offers higher visibility into the coaching course of by way of a number of enhancements:

- Artificial information transparency – Mannequin distillation now offers samples of the synthetically generated coaching information used to boost mannequin efficiency. For many mannequin households, as much as 50 pattern prompts are exported (as much as 25 for Anthropic fashions), supplying you with perception into how your mannequin was educated, which will help assist inner compliance necessities.

- Immediate insights reporting – A summarized report of prompts accepted for distillation is supplied, together with detailed visibility into prompts that had been rejected and the particular purpose for rejection. This suggestions mechanism helps you establish and repair problematic prompts to enhance your distillation success fee.

These insights are saved within the output S3 bucket specified throughout job creation, supplying you with a clearer image of the data switch course of.

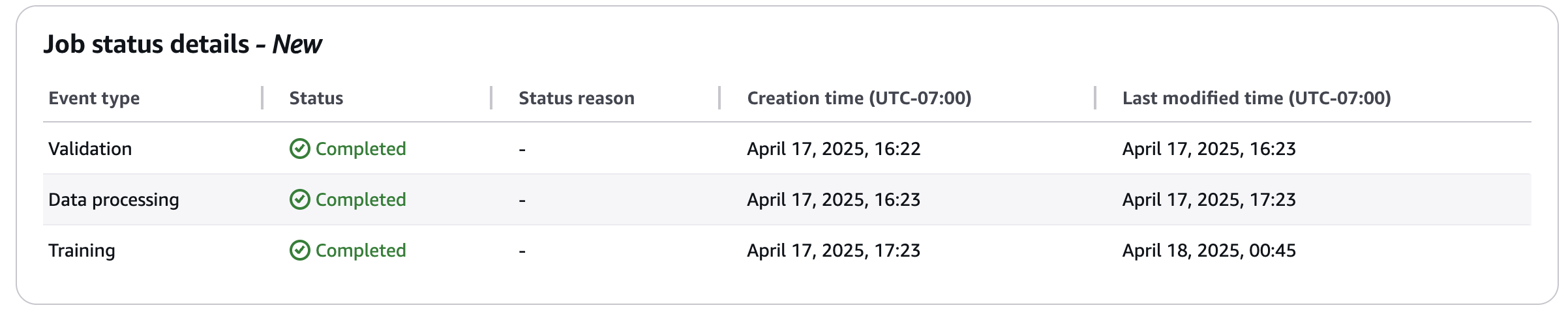

Improved job standing reporting

Amazon Bedrock Mannequin Distillation additionally provides enhanced coaching job standing reporting to supply extra detailed details about the place your mannequin distillation job stands within the course of. Slightly than temporary standing indicators comparable to “In Progress” or “Full,” the system now offers extra granular standing updates, serving to you higher monitor the progress of the distillation job.

You’ll be able to monitor these job standing particulars in each the AWS Administration Console and AWS SDK.

Efficiency enhancements and advantages

Now that we’ve explored the characteristic enhancements in Amazon Bedrock Mannequin Distillation, we study the advantages these capabilities ship, significantly for agent perform calling use instances.

Analysis metric

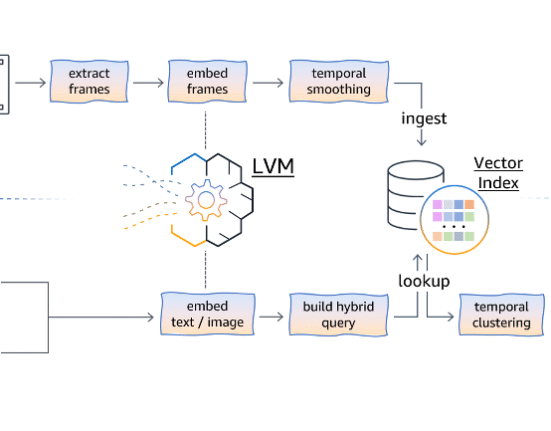

We use summary syntax tree (AST) to judge the perform calling efficiency. AST parses the generated perform name and performs fine-grained analysis on the correctness of the generated perform title, parameter values, and information varieties with the next workflow:

- Operate matching – Checks if the expected perform title is according to one of many potential solutions

- Required parameter matching – Extracts the arguments from the AST and checks if every parameter could be discovered and precise matched in potential solutions

- Parameter sort and worth matching – Checks if the expected parameter values and kinds are right

The method is illustrated in following diagram from Gorilla: Massive Language Mannequin Linked with Large APIs.

Experiment outcomes

To guage mannequin distillation within the perform name use case, we used the BFCL v2 dataset and filtered it to particular domains (leisure, on this case) to match a typical use case of mannequin customization. We additionally break up the info into coaching and take a look at units and carried out distillation on the coaching information whereas we ran evaluations on the take a look at set. Each the coaching set and the take a look at set contained round 200 examples. We assessed the efficiency of a number of fashions, together with the trainer mannequin (Llama 405B), the bottom scholar mannequin (Llama 3B), a vanilla distillation model the place Llama 405B is distilled into Llama 3B with out information augmentation, and a complicated distillation model enhanced with proprietary information augmentation strategies.

The analysis centered on easy and a number of classes outlined within the BFCL V2 dataset. As proven within the following chart, there’s a efficiency variance between the trainer and the bottom scholar mannequin throughout each classes. Vanilla distillation considerably improved the bottom scholar mannequin’s efficiency. Within the easy class, efficiency elevated from 0.478 to 0.783, representing a 63.8% relative enchancment. Within the a number of class, the rating rose from 0.586 to 0.742, which is a 26.6% relative enchancment. On common, vanilla distillation led to a forty five.2% enchancment throughout the 2 classes.

Making use of information augmentation strategies supplied additional beneficial properties past vanilla distillation. Within the easy class, efficiency improved from 0.783 to 0.826, and within the a number of class, from 0.742 to 0.828. On common, this resulted in a 5.8% relative enchancment throughout each classes, calculated because the imply of the relative beneficial properties in every. These outcomes spotlight the effectiveness of each distillation and augmentation methods in enhancing scholar mannequin efficiency for perform name duties.

We present the latency and output velocity comparability for various fashions within the following determine. The information is gathered from Synthetic Evaluation, an internet site that gives impartial evaluation of AI fashions and suppliers, on April 4, 2025. We discover that there’s a clear development on latency and era velocity as totally different measurement Llama fashions evaluated. Notably, the Llama 3.1 8B mannequin provides the very best output velocity, making it probably the most environment friendly when it comes to responsiveness and throughput. Equally, Llama 3.2 3B performs nicely with a barely increased latency however nonetheless maintains a strong output velocity. Alternatively, Llama 3.1 70B and Llama 3.1 405B exhibit a lot increased latencies with considerably decrease output speeds, indicating a considerable efficiency price at increased mannequin sizes. In comparison with Llama 3.1 405B, Llama 3.2 3B offers 72% latency discount and 140% output velocity enchancment. These outcomes recommend that smaller fashions could be extra appropriate for functions the place velocity and responsiveness are essential.

As well as, we report the comparability of price per 1M tokens for various Llama fashions. As proven within the following determine, it’s evident that smaller fashions (Llama 3.2 3B and Llama 3.1 8B) are considerably less expensive. Because the mannequin measurement will increase (Llama 3.1 70B and Llama 3.1 405B), the pricing scales steeply. This dramatic enhance underscores the trade-off between mannequin complexity and operational price.

Actual-world agent functions require LLM fashions that may obtain an excellent stability between accuracy, velocity, and value. This outcome reveals that utilizing a distilled mannequin for agent functions helps builders obtain the velocity and value of smaller fashions whereas getting comparable accuracy as a bigger trainer mannequin.

Conclusion

Amazon Bedrock Mannequin Distillation is now usually accessible, providing organizations a sensible pathway for deploying succesful agent experiences with out compromising on efficiency or cost-efficiency. As our efficiency analysis demonstrates, distilled fashions for perform calling can obtain accuracy corresponding to fashions many occasions their measurement whereas delivering considerably quicker inference and decrease operational prices. This functionality permits scalable deployment of AI brokers that may precisely work together with exterior instruments and techniques throughout enterprise functions.

Begin utilizing Amazon Bedrock Mannequin Distillation immediately by way of the AWS Administration Console or API to rework your generative AI functions, together with agentic use instances, with the stability of accuracy, velocity, and value effectivity. For implementation examples, take a look at our code samples within the amazon-bedrock-samples GitHub repository.

Appendix

BFCL V2 easy class

Definition: The straightforward class consists of duties the place the person is supplied with a single perform documentation (that’s, one JSON perform definition), and the mannequin is predicted to generate precisely one perform name that matches the person’s request. That is probably the most primary and generally encountered situation, specializing in whether or not the mannequin can accurately interpret an easy person question and map it to the one accessible perform, filling within the required parameters as wanted.

BFCL V2 a number of class

Definition: The a number of class presents the mannequin with a person question and several other (usually two to 4) perform documentations. The mannequin should choose probably the most applicable perform to name primarily based on the person’s intent and context after which generate a single perform name accordingly. This class evaluates the mannequin’s capacity to grasp the person’s intent, distinguish between comparable features, and select the very best match from a number of choices.

Concerning the authors

Yanyan Zhang is a Senior Generative AI Information Scientist at Amazon Internet Companies, the place she has been engaged on cutting-edge AI/ML applied sciences as a Generative AI Specialist, serving to prospects use generative AI to realize their desired outcomes. Yanyan graduated from Texas A&M College with a PhD in Electrical Engineering. Outdoors of labor, she loves touring, figuring out, and exploring new issues.

Yanyan Zhang is a Senior Generative AI Information Scientist at Amazon Internet Companies, the place she has been engaged on cutting-edge AI/ML applied sciences as a Generative AI Specialist, serving to prospects use generative AI to realize their desired outcomes. Yanyan graduated from Texas A&M College with a PhD in Electrical Engineering. Outdoors of labor, she loves touring, figuring out, and exploring new issues.

Ishan Singh is a Generative AI Information Scientist at Amazon Internet Companies, the place he helps prospects construct progressive and accountable generative AI options and merchandise. With a powerful background in AI/ML, Ishan focuses on constructing generative AI options that drive enterprise worth. Outdoors of labor, he enjoys enjoying volleyball, exploring native bike trails, and spending time together with his spouse and canine, Beau.

Ishan Singh is a Generative AI Information Scientist at Amazon Internet Companies, the place he helps prospects construct progressive and accountable generative AI options and merchandise. With a powerful background in AI/ML, Ishan focuses on constructing generative AI options that drive enterprise worth. Outdoors of labor, he enjoys enjoying volleyball, exploring native bike trails, and spending time together with his spouse and canine, Beau.

Yijun Tian is an Utilized Scientist II at AWS Agentic AI, the place he focuses on advancing elementary analysis and functions in Massive Language Fashions, Brokers, and Generative AI. Previous to becoming a member of AWS, he obtained his Ph.D. in Laptop Science from the College of Notre Dame.

Yijun Tian is an Utilized Scientist II at AWS Agentic AI, the place he focuses on advancing elementary analysis and functions in Massive Language Fashions, Brokers, and Generative AI. Previous to becoming a member of AWS, he obtained his Ph.D. in Laptop Science from the College of Notre Dame.

Yawei Wang is an Utilized Scientist at AWS Agentic AI, working on the forefront of generative AI applied sciences to construct next-generation AI merchandise inside AWS. He additionally collaborates with AWS enterprise companions to establish and develop machine studying options that handle real-world business challenges.

Yawei Wang is an Utilized Scientist at AWS Agentic AI, working on the forefront of generative AI applied sciences to construct next-generation AI merchandise inside AWS. He additionally collaborates with AWS enterprise companions to establish and develop machine studying options that handle real-world business challenges.

David Yan is a Senior Analysis Engineer at AWS Agentic AI, main efforts in Agent Customization and Optimization. Previous to that, he was in AWS Bedrock, main mannequin distillation effort to assist prospects optimize LLM latency, price and accuracy. His analysis curiosity consists of AI agent, planning and prediction and inference optimization. Earlier than becoming a member of AWS, David labored on planning and conduct prediction for autonomous driving in Waymo. Earlier than that, he labored on nature language understanding for data graph at Google. David obtained a M.S. in Electrical Engineering from Stanford College and a B.S. in Physics from Peking College.

David Yan is a Senior Analysis Engineer at AWS Agentic AI, main efforts in Agent Customization and Optimization. Previous to that, he was in AWS Bedrock, main mannequin distillation effort to assist prospects optimize LLM latency, price and accuracy. His analysis curiosity consists of AI agent, planning and prediction and inference optimization. Earlier than becoming a member of AWS, David labored on planning and conduct prediction for autonomous driving in Waymo. Earlier than that, he labored on nature language understanding for data graph at Google. David obtained a M.S. in Electrical Engineering from Stanford College and a B.S. in Physics from Peking College.

Panpan Xu is a Principal Utilized Scientist at AWS Agentic AI, main a group engaged on Agent Customization and Optimization. Previous to that, she lead a group in AWS Bedrock engaged on analysis and improvement of inference optimization strategies for basis fashions, overlaying modeling degree strategies comparable to mannequin distillation and sparsification to hardware-aware optimization. Her previous analysis curiosity covers a broad vary of matters together with mannequin interpretability, graph neural community, human-in-the-loop AI and interactive information visualization. Previous to becoming a member of AWS, she was a lead analysis scientist at Bosch Analysis and obtained her PhD in pc science from Hong Kong College of Science and Expertise.

Panpan Xu is a Principal Utilized Scientist at AWS Agentic AI, main a group engaged on Agent Customization and Optimization. Previous to that, she lead a group in AWS Bedrock engaged on analysis and improvement of inference optimization strategies for basis fashions, overlaying modeling degree strategies comparable to mannequin distillation and sparsification to hardware-aware optimization. Her previous analysis curiosity covers a broad vary of matters together with mannequin interpretability, graph neural community, human-in-the-loop AI and interactive information visualization. Previous to becoming a member of AWS, she was a lead analysis scientist at Bosch Analysis and obtained her PhD in pc science from Hong Kong College of Science and Expertise.

Shreeya Sharma is a Senior Technical Product Supervisor at AWS, the place she has been engaged on leveraging the facility of generative AI to ship progressive and customer-centric merchandise. Shreeya holds a grasp’s diploma from Duke College. Outdoors of labor, she loves touring, dancing, and singing.

Shreeya Sharma is a Senior Technical Product Supervisor at AWS, the place she has been engaged on leveraging the facility of generative AI to ship progressive and customer-centric merchandise. Shreeya holds a grasp’s diploma from Duke College. Outdoors of labor, she loves touring, dancing, and singing.

Leave feedback about this